Robots and artists have been combining forces for some years now. But the latest surge in generative AI opens even more doors… as the Leonardo project shows.

Over the years, RoboDK has been involved in various exciting robotic art projects. From giant musician sculptures for the streaming platform Spotify to filmmaking robots for video advertising, and even lights-out robot painting and robot-created 3D-printed food art, artists love our platform.

One of the biggest debates right now in the world of art technology centers on generative artificial intelligence (AI). Some artists are skeptical about the rise of AI art tools while others are embracing this new technology as simply another brush in their art kit.

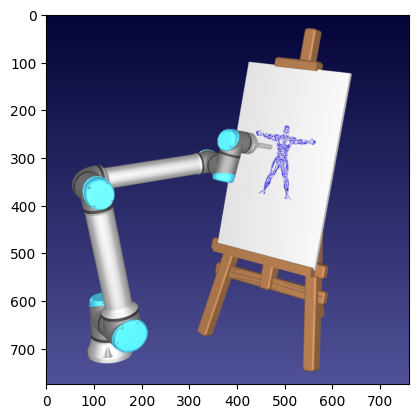

Leonardo is a project that aims to combine the power of generative AI with the physical flexibility of robotics.

Here’s how the project uses RoboDK to bring its vision to life…

The Fusion of Creativity and Automation

The RoboDK community is filled with highly creative roboticists. One RoboDK user recently posted in our user forum about a new direction that they had been investigating.

They said:

Lately I’ve been playing with ideas that mix generative AI and robotics. I wanted to share this project that uses RoboDK to make drawings using a natural language prompt input.

They explored this idea by creating the Leonardo project.

Robotic art is currently very much in the spotlight. Major news outlets often report on robotic art projects, including robot sculptors and artistic painting robots.

Artistic expression is a quality that seems very human. Emotion and subjective interpretation play a pivotal role in artistic creations. However, the Leonardo project aims to explore the boundary between human creativity and machine precision by creating a drawing robot that can generate and execute drawings based on natural language inputs.

Understanding Leonardo’s Core Components

Everything in the Leonardo project begins with natural language processing. Using OpenAI’s ChatGPT and DALL-E models, the system interprets the artist’s input and generates an image, which the RoboDK-powered robotic system then turns into a physical drawing.

Here is how some of the core components of the system combine:

- Audio Interface — The system has a voice module that allows the artist to speak to the system. Their speech is then converted into text by a speech-to-text transcription module.

- ChatGPT — Next comes ChatGPT, probably the most famous generative AI system in the world right now. This conversational AI captures the essence of the artist’s input and rewrites it as a prompt that can be used to generate an image.

- OpenAI’s DALL-E — DALL-E then creates a visual image based on the processed text input. This AI model produces an original, high-quality image that acts as a blueprint for the robot art.

- OctoPrint — If the user is working with a 3D printer instead, the output can be sent to the OctoPrint interface.

- RoboDK — For full robotic integration, the image is sent to RoboDK via its powerful API, which then creates a robot program tailored to your specific robot model.

Together, these components allow artists to enter a description of their desired image into the system using natural language. The Leonardo system then turns this into a usable robot program.
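To picture how these components hand data to one another, the chain can be thought of as a sequence of stages, each transforming the previous stage’s output. The sketch below is our own illustration, not code from the Leonardo repository: `run_pipeline`, `make_output_stage` and all the stage functions are hypothetical placeholders, and a real system would call actual speech-to-text, ChatGPT, DALL-E and RoboDK or OctoPrint services where these stubs simply return strings.

```python
from typing import Any, Callable, Sequence

# Each stage takes the previous stage's output and returns its own output.
Stage = Callable[[Any], Any]


def run_pipeline(stages: Sequence[tuple[str, Stage]], data: Any) -> Any:
    """Pass `data` through each named stage in order."""
    for name, stage in stages:
        data = stage(data)
        print(f"[{name}] done")
    return data


# Hypothetical placeholder stages; a real system would call speech-to-text,
# ChatGPT, DALL-E and RoboDK/OctoPrint here instead.
def transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the "audio" already holds text


def refine_prompt(text: str) -> str:
    return f"simple black line drawing of {text}"  # stand-in for ChatGPT


def generate_image(prompt: str) -> dict:
    return {"prompt": prompt, "pixels": []}  # stand-in for DALL-E


def make_output_stage(backend: str) -> Stage:
    """Pick the output target, mirroring the RoboDK vs OctoPrint options."""
    if backend not in ("robodk", "octoprint"):
        raise ValueError(f"unknown backend: {backend}")
    return lambda image: f"{backend} job for: {image['prompt']}"


if __name__ == "__main__":
    result = run_pipeline(
        [
            ("speech-to-text", transcribe),
            ("ChatGPT", refine_prompt),
            ("DALL-E", generate_image),
            ("output", make_output_stage("robodk")),
        ],
        b"a cat riding a bicycle",
    )
    print(result)  # robodk job for: simple black line drawing of a cat riding a bicycle
```

Keeping each stage as a plain function makes it easy to swap one service for another (for example, a different image model) without touching the rest of the chain.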

You can find out more in the project’s GitHub repository.

7 Steps to AI-Generated Robot Art

How can you create AI-generated robot art using the Leonardo system? Everything is carried out by a script.

Here are the 7 steps the script follows to generate your own robot-produced art:

- Initiation — The system plays an audio prompt to ask the user what they would like the robot to draw.

- Transcription — The artist then describes their proposed image out loud, and this is automatically transcribed to create a text input.

- Art Generation — Using the software components described above, the system then processes this text input and uses it to generate a visual image.

- Edge Tracing — The generated digital art then undergoes edge tracing, which reduces it to an outline of its essential shapes and figures. These lines become the paths that the robot will draw.

- Scaling — The traced image is then scaled so that it fits within the canvas (and the robot’s workspace) without distorting the original design.

- G-code Generation — Using RoboDK, the contours of the image are then converted into G-code that will guide the robot’s path throughout the drawing task.

- Robot Drawing — Finally, the robot uses its drawing tool to create the physical drawing of the generated artwork.
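The edge-tracing step can be illustrated with a very simple technique. We don’t know exactly which method Leonardo uses (a library routine such as OpenCV’s `findContours` is a common choice for this kind of task), so the sketch below is only an assumption-laden stand-in: it assumes the generated image has already been thresholded into a black-and-white grid (1 = ink, 0 = background) and keeps the ink pixels that touch the background, which leaves the outline of each shape. The function name `trace_edges` is hypothetical.

```python
Grid = list[list[int]]


def trace_edges(grid: Grid) -> list[tuple[int, int]]:
    """Return (row, col) of ink pixels (1) that touch background (0).

    Pixels outside the grid count as background, so shapes that reach the
    image border still get an outline there.
    """
    rows = len(grid)
    cols = len(grid[0]) if rows else 0
    edges = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != 1:
                continue
            # Check the four direct neighbours: up, down, left, right.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                outside = not (0 <= nr < rows and 0 <= nc < cols)
                if outside or grid[nr][nc] == 0:
                    edges.append((r, c))
                    break
    return edges


if __name__ == "__main__":
    # A filled 4x4 square: only its 12 border pixels should survive.
    square = [[0] * 6] + [[0, 1, 1, 1, 1, 0] for _ in range(4)] + [[0] * 6]
    edge_pixels = trace_edges(square)
    print(len(edge_pixels))  # 12
```

Note that this produces an unordered set of outline pixels. Turning them into drawable strokes needs a further contour-following step that orders the points along each line, which this sketch leaves out.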

The whole 7-step process is carried out automatically by the Leonardo script. Together, the processing stages aim to keep the finished artwork as close as possible to the artist’s spoken prompt while staying within the robot’s physical limitations.
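Scaling and G-code generation are where the robot’s physical limits come in. As a rough sketch, assuming each traced contour is a list of (x, y) pixel coordinates and the canvas is a rectangle measured in millimetres, the code below scales all contours by one shared factor to fit the canvas, then writes basic G-code: `G0` rapid moves with the pen raised between contours and `G1` linear moves to draw. According to the steps above, Leonardo does this conversion with RoboDK; this plain-Python version is only an illustration, and the function names, margin, pen heights and feed rate are our own assumptions rather than values from the project.

```python
Point = tuple[float, float]
Contour = list[Point]


def scale_to_canvas(contours: list[Contour], width_mm: float, height_mm: float,
                    margin_mm: float = 10.0) -> list[Contour]:
    """Uniformly scale pixel contours to fit a canvas, inside a margin.

    Image rows grow downwards while canvas Y usually grows upwards, so Y is
    flipped. Assumes there is at least one point in total.
    """
    xs = [x for contour in contours for x, _ in contour]
    ys = [y for contour in contours for _, y in contour]
    min_x, max_y = min(xs), max(ys)
    span_x = (max(xs) - min_x) or 1.0  # avoid dividing by zero for flat shapes
    span_y = (max_y - min(ys)) or 1.0
    # One factor for both axes preserves the design's proportions.
    scale = min((width_mm - 2 * margin_mm) / span_x,
                (height_mm - 2 * margin_mm) / span_y)
    return [[(margin_mm + (x - min_x) * scale, margin_mm + (max_y - y) * scale)
             for x, y in contour] for contour in contours]


def to_gcode(contours: list[Contour], z_up: float = 5.0, z_down: float = 0.0,
             feed: int = 1500) -> str:
    """Emit simple G-code: pen up between contours, pen down while drawing."""
    lines = ["G21 ; millimetres", "G90 ; absolute coordinates"]
    for contour in contours:
        if not contour:
            continue
        x0, y0 = contour[0]
        lines.append(f"G0 Z{z_up:.2f}")            # lift the pen
        lines.append(f"G0 X{x0:.2f} Y{y0:.2f}")    # travel to the contour start
        lines.append(f"G1 Z{z_down:.2f} F{feed}")  # lower the pen onto the paper
        for x, y in contour[1:]:
            lines.append(f"G1 X{x:.2f} Y{y:.2f} F{feed}")  # draw a segment
    lines.append(f"G0 Z{z_up:.2f}")  # lift the pen when finished
    return "\n".join(lines)


if __name__ == "__main__":
    # A 100x50 px rectangle scaled onto a 220x120 mm canvas.
    rect = [[(0, 0), (100, 0), (100, 50), (0, 50), (0, 0)]]
    print(to_gcode(scale_to_canvas(rect, 220, 120)))
```

Using a single scale factor for both axes is what keeps the design intact: stretching each axis independently would fill the canvas but distort the drawing.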

The Impact of Generative AI on Artists

What will be the impact of projects like Leonardo on the work of artists?

The project doesn’t aim to replace artists. Rather, it explores the relationship between spoken descriptions of art and the exciting possibilities of generative AI.

The words spoken by the artist at the very start of the process will have a huge impact on the resulting artwork. In this way, the artist is as much a creator of the art as they would be using any other artistic medium.

With so many artists already using RoboDK, it seems likely that we will see even more of this type of project appearing soon!

What do you think are the possibilities and limitations of AI-generated robotic art? Join the discussion on LinkedIn, Twitter, Facebook, Instagram, or in the RoboDK Forum. Also, check out our extensive video collection and subscribe to the RoboDK YouTube Channel.