In the fast-paced robotics and automation industry, time is of the essence. With the rise of more powerful Artificial Intelligence (AI) models, it is now possible to create virtual assistants that can retrieve relevant information from large databases, converse with users, and even create robot programs. The new RoboDK Virtual Assistant aims to leverage these capabilities to give users a new tool that saves time and helps with more complex tasks.

The most advanced AI-powered virtual assistants are built on a type of deep learning model, trained on extremely large datasets, known as a Large Language Model (LLM). Despite some current limitations, LLMs are revolutionizing the way we all interact with computers; the most prominent recent examples are OpenAI’s ChatGPT and Meta’s Llama 2.

Let’s dive a little deeper into the world of Large Language Models and explore how they function, the challenges they currently face, and the future possibilities they present. As a bonus, we’ll discuss how we created the RoboDK Virtual Assistant and what we envision on the horizon.

Challenges and Future Possibilities of Large Language Models (LLMs)

Large Language Models are cutting-edge AI models with the remarkable ability to understand and generate human-like text. At their core, LLMs are finely tuned mathematical functions, trained to predict the next word or piece of text (a token) given the preceding context. Each token in the input text is converted into a numerical representation, which the model’s neural network then processes using a very large number of learnable parameters called weights and biases. During training, backpropagation computes how much each parameter contributed to the discrepancy between the model’s predictions and the actual next words in the training data, and the parameters are adjusted to reduce that error. Repeating this over enormous amounts of text produces an unimaginably complex function that can generate coherent and contextually relevant responses. Advances in computing technology have enabled us to increase the size of these models: GPT-4 is reported to have approximately 1.76 trillion parameters, although OpenAI has not officially disclosed the figure.
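To make the training idea above concrete, here is a toy next-word predictor. It is a minimal sketch, not how GPT-4 or any production LLM is built: it assumes a single table of learnable scores (one row per previous word) instead of a deep transformer network, and it trains on a made-up eight-word corpus. Each step measures the cross-entropy error between the predicted probabilities and the real next word, then nudges the scores by gradient descent; for this one-layer model, backpropagation reduces to the single gradient formula `probs - one_hot(target)`.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def average_loss(pairs, weights):
    """Mean cross-entropy: how 'surprised' the model is by the real next words."""
    return sum(-math.log(softmax(weights[c])[t]) for c, t in pairs) / len(pairs)


def train(pairs, vocab_size, epochs=500, lr=0.1):
    """Gradient descent on a one-layer next-word model (one score row per word)."""
    weights = [[0.0] * vocab_size for _ in range(vocab_size)]
    for _ in range(epochs):
        for context, target in pairs:
            probs = softmax(weights[context])
            for j in range(vocab_size):
                # d(loss)/d(score_j) = probs_j - 1 for the true next word, else probs_j
                grad = probs[j] - (1.0 if j == target else 0.0)
                weights[context][j] -= lr * grad
    return weights


# Tiny made-up corpus; each word becomes an integer index (its numeric representation)
tokens = "the robot moves the robot stops the arm".split()
vocab = sorted(set(tokens))
index = {word: i for i, word in enumerate(vocab)}
pairs = [(index[a], index[b]) for a, b in zip(tokens, tokens[1:])]

untrained = [[0.0] * len(vocab) for _ in vocab]
weights = train(pairs, len(vocab))


def predict_next(word):
    """Return the most probable next word after `word`."""
    probs = softmax(weights[index[word]])
    return vocab[max(range(len(vocab)), key=probs.__getitem__)]


print(f"loss before training: {average_loss(pairs, untrained):.3f}")
print(f"loss after training:  {average_loss(pairs, weights):.3f}")
print("after 'the':", predict_next("the"))
```

Before training, the loss equals ln 5 (uniform guessing over the five distinct words); after training it is lower, and the model predicts "robot" after "the" because that pair occurs most often in the corpus.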

Sebastien Bubeck, Sr. Principal Research Manager at Microsoft Research, says:

Beware of the trillion-dimensional space, it’s something which is very very hard for us as human beings to grasp, there is a lot that you can do with a trillion parameters.

LLMs have transformed fields such as natural language processing, content generation, and virtual assistants. In RoboDK’s Virtual Assistant, the LLM provides the advanced conversational abilities: it lets the assistant understand more complex queries and respond with coherent, contextually relevant information. Bringing this technology into the robotics industry paves the way for more efficient and natural human-robot interactions.

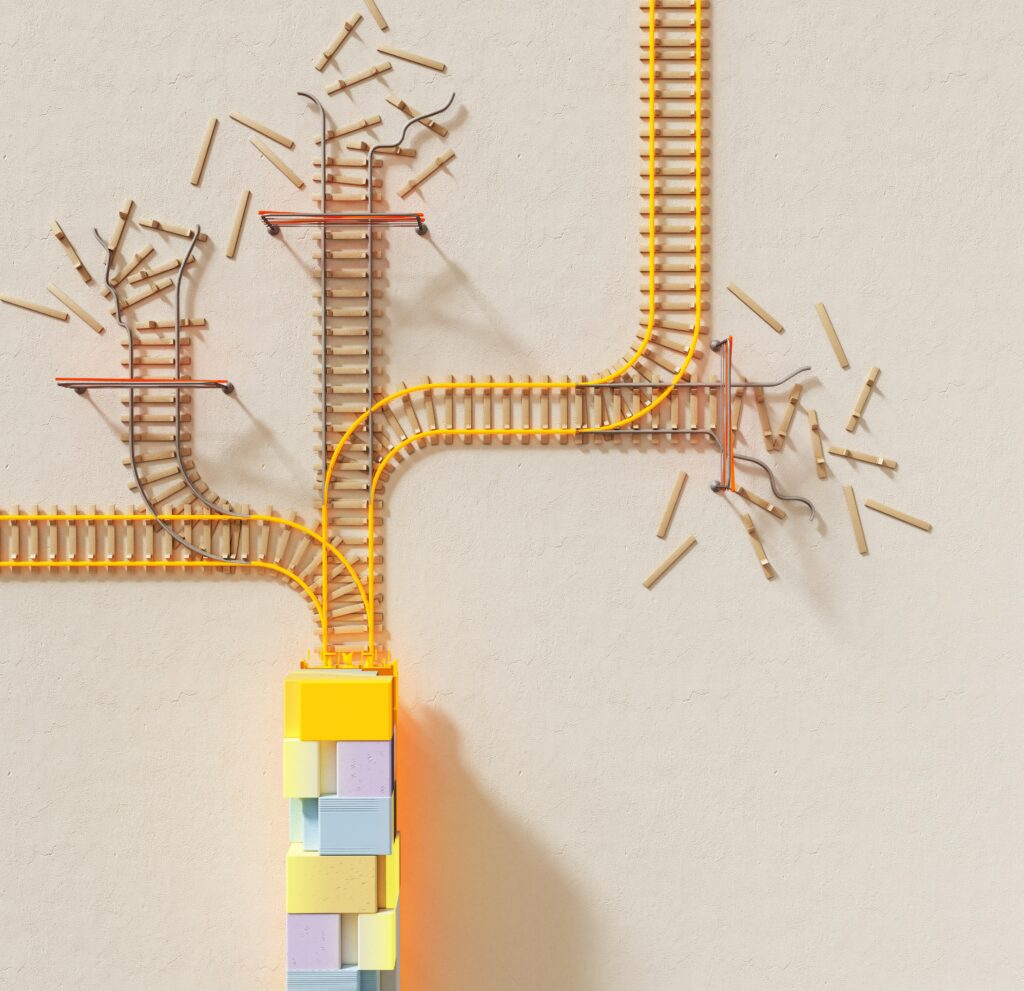

Despite these advances, LLMs face certain limitations and challenges. Biases in the training data can propagate into the models, leading to biased and unfair responses. LLMs may also struggle to detect and interpret contextual cues, producing responses that lack nuance or rely on spurious patterns in the data. Moreover, there is growing concern about false responses and misinformation generated by AI models, which can have significant consequences for users who rely on AI-generated content. It is crucial to exercise caution and to ensure that LLM outputs are fact-checked and reviewed by a human. Understanding and addressing these challenges is essential to harnessing the capabilities of LLMs while mitigating their risks.

RoboDK’s Virtual Assistant

Now that we have explored the world of LLMs, let’s shift our focus to the RoboDK Virtual Assistant. This Virtual Assistant is the first step towards a comprehensive, general-purpose assistant for RoboDK. At its core is OpenAI’s gpt-3.5-turbo-0613 model. The model is given additional context about RoboDK through an indexed database containing the RoboDK website, documentation, forum threads, blog posts, and more. The indexing is done with LlamaIndex, a data framework designed to connect custom data sources to LLMs. Thanks to this integration, the Virtual Assistant can quickly provide useful technical support for over 75% of user queries on the RoboDK forum, reducing the time users spend manually searching through the website and documentation. Users can expect an answer to their question in 5 seconds or less.
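The general idea of answering from an indexed database (retrieve the most relevant text, then paste it into the prompt) can be sketched in a few lines. This is not LlamaIndex’s API and not RoboDK’s actual pipeline: it swaps in simple word-count vectors and cosine similarity, whereas vector indexes built with LlamaIndex usually rely on embeddings from a neural model. The three placeholder documents, `TinyIndex`, and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyIndex:
    """Hypothetical in-memory index: each document stored as a word-count vector."""

    def __init__(self, docs):
        self.docs = docs
        self.vectors = [Counter(tokenize(d)) for d in docs]

    def retrieve(self, query, k=2):
        """Return the k documents most similar to the query."""
        q = Counter(tokenize(query))
        ranked = sorted(
            range(len(self.docs)),
            key=lambda i: cosine(q, self.vectors[i]),
            reverse=True,
        )
        return [self.docs[i] for i in ranked[:k]]


def build_prompt(question, chunks):
    """Paste retrieved text into the prompt so the LLM can ground its answer."""
    context = "\n".join(f"- {c}" for c in chunks)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Placeholder documents standing in for website pages, docs and forum posts
docs = [
    "Post processors translate a simulated program into the robot controller's language.",
    "Collision detection checks whether objects in the station intersect.",
    "The forum lets users ask questions and share projects.",
]
index = TinyIndex(docs)
question = "Which tool translates my program into robot controller code?"
print(build_prompt(question, index.retrieve(question, k=1)))
```

In a real system, the resulting prompt would then be sent to the language model; that call is omitted here so the sketch runs offline.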

As remarkable as the RoboDK Virtual Assistant is, it still has limitations compared to a human assistant. The model answers the same question the same way every time: its temperature (the setting that controls how much randomness goes into choosing each word) is set to 0, so no randomness is added to its answers. Its performance on math-related queries is subpar, and it lacks conversational memory and web-search capabilities. In some cases, users may also need to rephrase their queries to receive an adequate response. Understandably, these limitations can lead to frustration in certain situations. To overcome them, RoboDK is already exploring alternatives such as LangChain.
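Temperature is commonly applied by dividing the model’s raw next-word scores by T before the softmax: higher values flatten the distribution and add variety, while T approaching 0 puts all the probability on the highest-scoring word. The sketch below treats T = 0 as greedy selection, which is why the same question yields the same answer. The candidate words and scores are hypothetical, and this is a simplified illustration rather than OpenAI’s exact decoding procedure.

```python
import math
import random


def next_word_probs(scores, temperature):
    """Apply temperature to raw scores; temperature 0 means greedy (argmax)."""
    if temperature == 0:
        best = max(range(len(scores)), key=scores.__getitem__)
        return [1.0 if i == best else 0.0 for i in range(len(scores))]
    scaled = [s / temperature for s in scores]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(words, scores, temperature, rng):
    """Draw one word according to the temperature-adjusted probabilities."""
    return rng.choices(words, weights=next_word_probs(scores, temperature), k=1)[0]


# Hypothetical candidate words and scores for the next word of an answer
words = ["joint", "linear", "circular"]
scores = [2.0, 1.5, 0.5]
rng = random.Random(0)

greedy = {sample_next(words, scores, 0, rng) for _ in range(20)}
creative = {sample_next(words, scores, 1.0, rng) for _ in range(200)}
print("temperature 0:", greedy)
print("temperature 1:", creative)
```

At temperature 0 the top-scoring word wins every time; at temperature 1, repeated draws pick different words.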

LangChain is a framework that aims to overcome many of the challenges faced by LLM-powered applications. It lets developers build applications that are data-aware (connected to other sources of data) and agentic (able to interact with their environment), which addresses most of the limitations mentioned above. Imagine an AI assistant that not only understands your questions but can also break them down into tasks, use tools such as calculators, search the web for relevant information, and engage in troubleshooting conversations with you.
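The agentic pattern described above, where a question is split into steps and each step is routed to a tool, can be sketched without any framework. In LangChain the language model itself decides which tool to call; here a simple rule stands in for that decision so the example runs without an LLM. The tool names, `choose_tool`, and `run_agent` are hypothetical and are not part of LangChain’s API.

```python
import ast
import operator
import re

# Arithmetic operators the calculator tool is allowed to evaluate
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
    ast.USub: operator.neg,
}


def calculator(expression):
    """Tool: safely evaluate a numeric expression by walking its syntax tree."""
    def ev(node):
        if isinstance(node, ast.Expression):
            return ev(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError("unsupported expression")
    return ev(ast.parse(expression, mode="eval"))


def search(query):
    """Tool (hypothetical stub): a real agent would query the docs or the web."""
    return f"[search results for: {query}]"


TOOLS = {"calculator": calculator, "search": search}


def choose_tool(step):
    """Stand-in for the LLM's decision: pure arithmetic goes to the calculator."""
    if re.fullmatch(r"[\d\s.+\-*/()]+", step):
        return "calculator"
    return "search"


def run_agent(steps):
    """Run each sub-task with its chosen tool and collect (step, tool, result)."""
    observations = []
    for step in steps:
        name = choose_tool(step)
        observations.append((step, name, TOOLS[name](step)))
    return observations


# A question already broken into sub-tasks (hypothetical example)
steps = ["payload limit of my robot model", "(12.5 + 7.5) * 3"]
for step, tool, result in run_agent(steps):
    print(f"{tool:>10}: {step} -> {result}")
```

Keeping the tools behind a plain dictionary makes it easy to add new capabilities (for example, a web-search tool) without changing the routing loop.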

Moving forward, integrating the Virtual Assistant directly into RoboDK holds great promise. With awareness of the software, its settings, and the station currently in use, the assistant could become an even more valuable asset. There has also been a rise in models fine-tuned specifically for writing code. The assistant could use these models to let users automate programming tasks simply by describing the desired behavior in natural language. This level of accessibility is set to have a significant impact on robotics and automation.

As we wrap up our exploration of large language models and the RoboDK Virtual Assistant, there is no doubt that the potential of AI in robotics is expanding at a remarkable pace. The RoboDK Virtual Assistant provides users with a valuable tool to save time and assist with complex tasks, showcasing the power of AI-driven technology. However, it is important to remain mindful of the ethical implications and limitations of large language models. Let’s continue to stay informed about the latest advancements in AI, robotics, and RoboDK, and engage in open conversations to ensure a responsible and beneficial integration of these technologies into the manufacturing industry.

What questions do you have about RoboDK’s Virtual Assistant? Tell us in the comments below or join the discussion on LinkedIn, Twitter, Facebook, Instagram, or in the RoboDK Forum. Also, check out our extensive video collection and subscribe to the RoboDK YouTube Channel.